Cloud computing, outsourced support models and microservice development practices are fast becoming mainstream options when delivering IT for financial services. However, all of these advances create a gap between the business and the systems that underpin its services.

This comes down to a number of factors:

- A lack of infrastructure engineering knowledge on the part of the client, with infrastructure support being entirely delegated to the cloud supplier

- System and application knowledge being unavailable via third party support, due to the expectation that runbooks and documented recovery procedures will be in place instead

- Reduced levels of system-wide developer knowledge caused by the development of disparate components, as each component is delivered as a standalone element and contract compliance means that no upstream or downstream knowledge is required

These combine to introduce and/or exacerbate risks to continuity and resilience through the loss of direct operational knowledge and accountability.

Can autonomic systems successfully fill the gap?

Borrowing heavily from the concept of the human nervous system, an autonomic system is a complex system that can be efficiently and successfully managed through internal monitors and actions rather than external intervention.

Autonomic systems are not a new concept, being a well-understood solution to accessing and performing maintenance and repairs in the face of geographic and environmental restrictions – for instance, for a datacentre in Antarctica or a robot on Mars.

IBM provided a classic breakdown of such autonomic characteristics across four distinct areas:

- Self-configuration. The ability of a system to adapt independently to changes in its environment, such as the loss of a primary science instrument, where another onboard instrument may need to be used instead. Similarly, if the power capacity of its cells is falling, unnecessary functions may need to be shut down to reduce load.

- Self-healing. The capability to detect malfunctions and adapt without manual intervention. Physical damage, for example, may be remediated through the selection of materials that seal themselves, while software can be built with enough redundancy to tolerate the loss of supporting components.

- Self-optimisation. Autonomous and continuous modifications to reflect the operating environment to elicit performance and cost benefits. Efficient use of power by adapting to the local environment and learning where best to focus operations increases capability.

- Self-protection. Detection and mitigation of security threats in real time. A solar flare, for example, could result in a system entering sleep mode, or a dust storm could result in it moving to a more protected area.
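The four characteristics above can be illustrated with a minimal sketch of a single monitor-and-act cycle. Everything in it is an assumption made for illustration: the `Rover` class, its fields, the 20% battery threshold and the duty-cycle rule are hypothetical and are not drawn from IBM's material or from any real system.

```python
from dataclasses import dataclass


@dataclass
class Rover:
    """Hypothetical state of a remote system that cannot be serviced by hand."""
    battery_pct: float
    primary_instrument_ok: bool = True
    hull_breached: bool = False
    solar_flare: bool = False
    active_instrument: str = "primary"
    optional_functions_on: bool = True
    sleeping: bool = False
    duty_cycle: float = 1.0


def autonomic_step(r: Rover, low_battery_pct: float = 20.0) -> list[str]:
    """Run one internal monitor-and-act cycle and return the actions taken."""
    actions = []

    # Self-protection: react to an external threat as soon as it is detected.
    if r.solar_flare and not r.sleeping:
        r.sleeping = True
        actions.append("self-protection: entered sleep mode")

    # Self-healing: detect a malfunction and repair it without intervention.
    if r.hull_breached:
        r.hull_breached = False
        actions.append("self-healing: sealed breach")

    # Self-configuration: adapt to the loss of a component or of power.
    if not r.primary_instrument_ok and r.active_instrument == "primary":
        r.active_instrument = "secondary"
        actions.append("self-configuration: switched to secondary instrument")
    if r.battery_pct < low_battery_pct and r.optional_functions_on:
        r.optional_functions_on = False
        actions.append("self-configuration: shut down optional functions")

    # Self-optimisation: tune the work rate to the power that is available.
    target = min(1.0, r.battery_pct / 100)
    if target != r.duty_cycle:
        r.duty_cycle = target
        actions.append("self-optimisation: adjusted duty cycle")

    return actions
```

Calling `autonomic_step` repeatedly is safe in this sketch: once the system has adapted, a further cycle with unchanged inputs takes no action.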

Today, site reliability engineering, where the operation and support of an IT system is achieved through scripting and automation, has brought autonomic computing to the forefront of software development. It addresses the lack of direct access to systems that has come with the rise of cloud computing.

How can autonomic systems provide value?

In the context of financial services, autonomic systems offer two main benefits:

- Slow down the rising cost of IT management – The costs associated with supporting modern IT ecosystems are no longer managed as capital expenditure. As technology becomes a disposable commodity, and local methods of procurement and management become more prevalent (a consumption model rather than an investment model), adding, scaling and managing technology has the potential to become significantly more expensive. Greater flexibility results in a model where expenditure is retrospective (in the shape of cloud providers’ regular invoices) rather than managed and forecast through rigorous cost control and governance.

Developing automated processes to manage the availability, costs, scaling and day-to-day management of technology will be crucial to containing the soaring costs of supporting such complex and fast-moving environments. Autonomic mechanisms achieve this in two ways: the software itself determines its optimal resource level (shutting down unneeded nodes, for example), and self-healing reduces the need to provision redundant capacity to deal with failure (nodes can be shut down and replaced on failure).

- Lower Total Cost of Ownership – The prospect of an adaptive system that can be left to run and manage itself is an attractive one for most businesses that rely on IT processing to be efficient, safe and reliable. Such a system runs only those resources needed to fulfil real-time load requirements, tolerates and resolves failures at a micro level, and restricts manual support intervention to a small fraction of faults, lowering the cost of operations.
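The node-level behaviour described above (running only what the load needs and replacing failed nodes rather than holding spare capacity) can be sketched as a single reconciliation function. This is an illustrative sketch only: the `Node` type, the `reconcile` function and the requests-per-second capacity model are hypothetical and do not correspond to any particular cloud provider's API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    healthy: bool


def reconcile(nodes, load_rps, capacity_rps_per_node, min_nodes=1):
    """Return (names_to_shut_down, count_to_start) for the current load.

    Assumes each node can serve `capacity_rps_per_node` requests per second.
    """
    # Self-optimisation: size the pool to the real-time load, not to a forecast.
    desired = max(min_nodes, math.ceil(load_rps / capacity_rps_per_node))

    # Self-healing: failed nodes are always shut down and replaced on demand.
    failed = [n.name for n in nodes if not n.healthy]
    healthy = [n.name for n in nodes if n.healthy]

    surplus = healthy[desired:]                # healthy nodes the load does not need
    to_start = max(0, desired - len(healthy))  # replacements or extra capacity
    return failed + surplus, to_start
```

For example, with healthy nodes `a`, `c` and `d`, a failed node `b`, a load of 150 requests per second and 100 per node, the function asks for `b` and `d` to be shut down and no new nodes to be started.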

However, meeting the requirements for a fully autonomous system will incur upfront design and architecture costs. These costs must be weighed against the benefits that would be realised, and compared with alternative approaches to providing a capability that matches the business requirements, especially with regard to cost.

Autonomy – but how much?

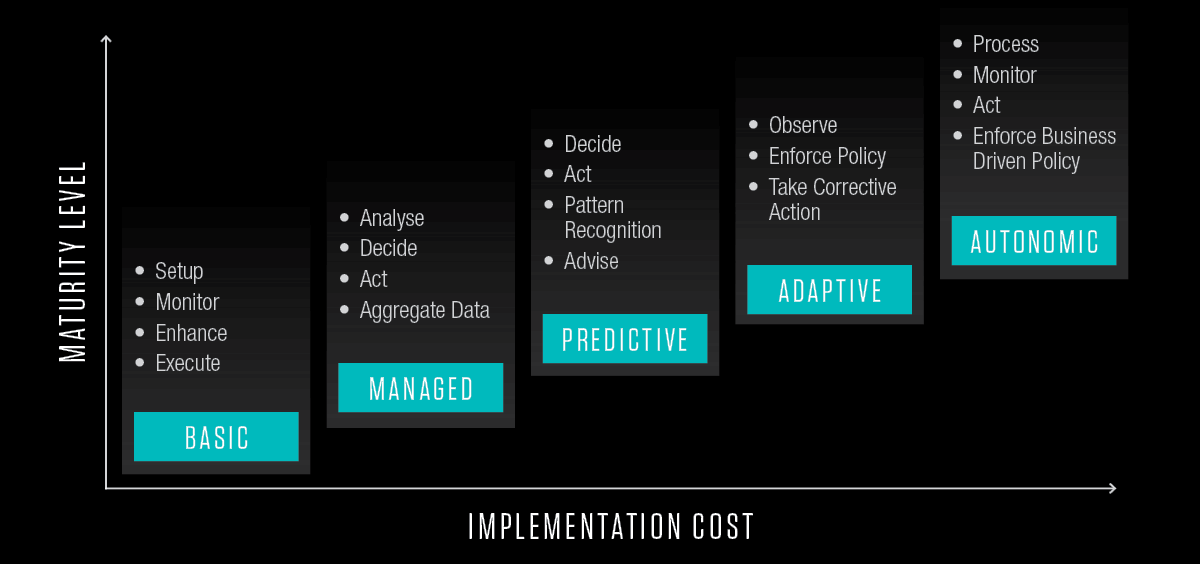

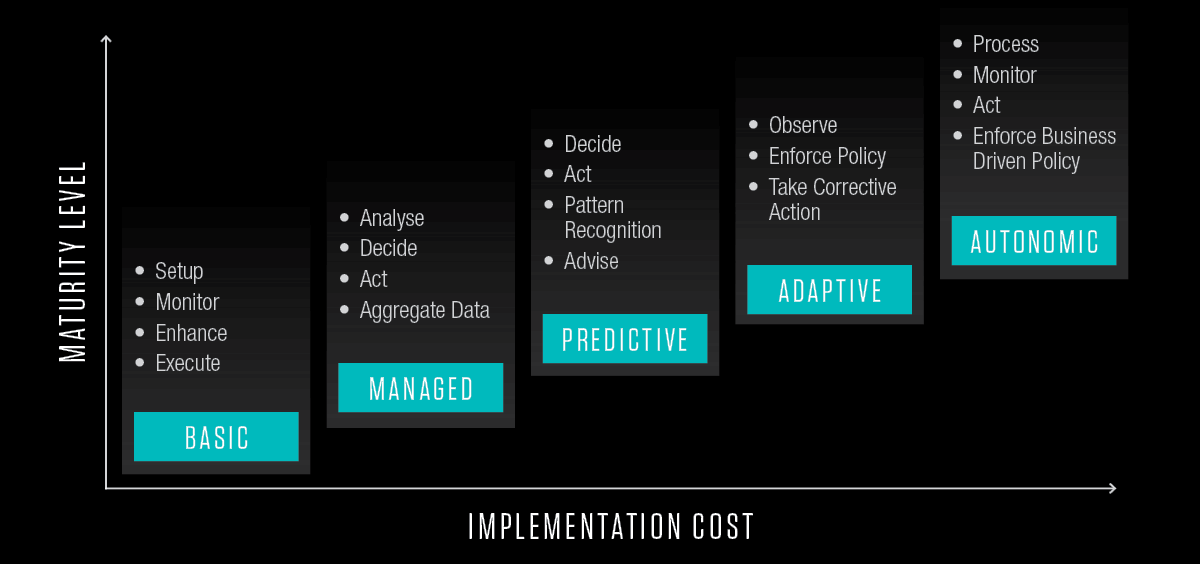

Autonomic system capabilities can be defined via a maturity scale, as depicted in the accompanying figure, that represents the progression from standard logging, monitoring and action to fully autonomic status. The levels on this scale can also be viewed as targets, where an appropriate endpoint is determined by measuring the specific system requirement against the most important business factors. For example, a predictive level may fully deliver the business requirement, with the benefits of further progression being outweighed by the costs.
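The idea of choosing a target level by weighing benefit against cost can be sketched as follows. The level names are an assumption: Basic, Managed and Predictive echo terms used in this article, while Adaptive is a placeholder for the step before fully autonomic, and the figure may label the levels differently. The benefit and cost figures in the usage example are invented purely for illustration.

```python
from enum import IntEnum


class Maturity(IntEnum):
    """Assumed maturity levels; the names are illustrative placeholders."""
    BASIC = 1
    MANAGED = 2
    PREDICTIVE = 3
    ADAPTIVE = 4
    AUTONOMIC = 5


def target_level(benefit, cost):
    """Pick the level with the highest net benefit.

    `benefit` and `cost` map each Maturity level to an estimate in the same
    unit. Ties are resolved in favour of the lower (cheaper to build) level.
    """
    return max(Maturity, key=lambda lvl: (benefit[lvl] - cost[lvl], -lvl))


# Hypothetical estimates: benefit flattens out while cost keeps rising.
benefit = {Maturity.BASIC: 10, Maturity.MANAGED: 40, Maturity.PREDICTIVE: 90,
           Maturity.ADAPTIVE: 100, Maturity.AUTONOMIC: 105}
cost = {Maturity.BASIC: 5, Maturity.MANAGED: 20, Maturity.PREDICTIVE: 45,
        Maturity.ADAPTIVE: 80, Maturity.AUTONOMIC: 130}

chosen = target_level(benefit, cost)
```

With these invented numbers the predictive level is chosen, because the additional benefit of the higher levels is smaller than their additional cost.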

Legacy systems are generally managed at a Basic maturity level, which consists of basic automation tools such as BPM, supplemented by manual instrumentation and workflow.

Current systems are more actively managed, with process mining, machine learning and robotics playing a major role in the processes, supported by vendor tools such as Dynatrace and Splunk as well as emerging offerings.

Cognitive systems, such as neural networks that make contextual decisions automatically, are available as technology choices for reaching a more predictive level.

Artificial Intelligence is progressing rapidly, with the expectation that non-sentient automation will become a commodity offering soon.

And not forgetting open source…

The open source software community is a rapidly changing environment in which a large number of today’s technologies are developed before being adopted as part of the business community’s strategic IT platforms. While this approach delivers massive innovation at a pace that would be impossible to achieve individually, it also demands an acceptance that change is frequent and adaptation is an ongoing requirement.

In summary

Selecting the correct level of autonomy is key to realising value: aiming too high can result in an unacceptable overhead at the development stage and may exceed what is needed to meet actual business resiliency requirements. If care is taken at the definition stage to identify a balanced approach, the development investment can be managed to deliver the optimum cost benefit.

There are of course some applications that would benefit from a comprehensive autonomic approach. For most organisations, however, the pragmatic position is a hybrid: autonomy at the level of individual components, combined with wider Hyperautomation (a business-prioritised philosophy to accelerate the automation of as many business and IT processes as possible) to better manage the overall ecosystem. This is likely to be the most cost-effective way to simplify complex system management and reduce its costs while also achieving the aims of the autonomic systems philosophy.

We hope that you have enjoyed reading this installment of Capco’s 2023 Tech Trends series. Written by our in-house practitioners, the series covers composable applications, cybersecurity mesh, data fabrics and autonomic systems – topics that are both relevant and additive when addressing a number of the key business and technology challenges that the financial services industry is now facing.